Open WebUI is a self-hosted, web-based interface for AI models that is designed to operate entirely offline. It integrates with LLM runners such as Ollama and with OpenAI-compatible APIs, and supports features like Markdown and LaTeX rendering, model management, and voice/video calls. It also offers multilingual support and can generate images through APIs such as DALL-E or ComfyUI.

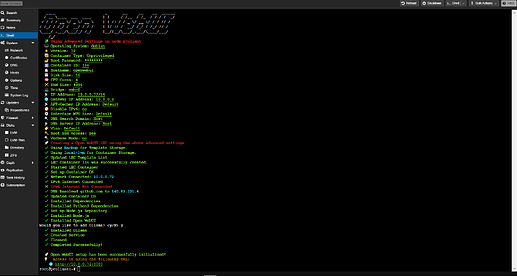

To create a new Proxmox VE Open WebUI LXC, run the command below in the Proxmox VE Shell.

bash -c "$(wget -qLO - https://github.com/community-scripts/ProxmoxVE/raw/main/ct/openwebui.sh)"

Default settings

CPU: 4 vCPU

RAM: 4 GB

HDD: 16 GB

Default Interface: IP:8080 (where IP is the container's IP address)
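
Once the container is running, a quick reachability check can confirm that the web interface answers on the default port. The following is a minimal sketch, not part of the install script: the function names `openwebui_url` and `openwebui_is_up` are hypothetical, the address `192.0.2.10` is a documentation placeholder, and the check assumes `curl` is installed and that the interface is served over plain HTTP.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for illustration; they are not provided by the install script.

# Build the URL of the web interface from the container's IP address.
# The port defaults to 8080, the default interface port listed above.
openwebui_url() {
  local ip="$1" port="${2:-8080}"
  printf 'http://%s:%s\n' "$ip" "$port"
}

# Return 0 if the interface answers an HTTP request within 5 seconds,
# non-zero otherwise (connection refused, timeout, or HTTP error status).
openwebui_is_up() {
  curl --silent --fail --output /dev/null --max-time 5 "$(openwebui_url "$@")"
}
```

For example, `openwebui_is_up 192.0.2.10 && echo "Reachable at $(openwebui_url 192.0.2.10)"` prints the URL only when the request succeeds; substitute the address assigned to your container.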